BIP: Advancements and applications of wearable technology

Through hands-on projects, the course aims to explore real-world applications of wearable technology and telerobotics, emphasizing their innovative uses and impact.

The course will provide a hands-on, project-based approach where students work with data, wearable devices, and robotic systems to design, implement, and test interactive applications. Students will collaborate in interdisciplinary groups of 4-5 to develop practical solutions that address real-world challenges. Each group will work on a specific application of wearable technology or telerobotics, tailored to healthcare, arts, or HRI.

Most tasks will be done in teams, encouraging students to collaborate across disciplines such as engineering, computer science, and design. Although collaboration is key, students are expected to take on individual roles within the group, such as data analysis or hardware assembly, ensuring each member contributes effectively.

This course is designed to introduce students to the cutting-edge fields of wearable technology and telerobotics, with applications in healthcare (rehabilitation), the arts, and human-robot interaction (HRI). The content is structured to cover key theoretical concepts and practical applications, culminating in the development of functional prototypes by students.

Main topics addressed:

1. Wearable Technology and Remote Sensing: Integration of wearables and sensors for real-time data collection and monitoring across various fields.

2. Data Processing and Prototyping: Efficient data collection, processing, and system design to enhance wearable and remote sensing technologies.

3. Applications in Healthcare, Arts, and HRI: Wearable technology’s impact on healthcare, creative industries, and human-robot interaction, including digital twins and telerobotics.

By the end of this course, students will have gained both theoretical understanding and practical skills in the fields of wearable technology and telerobotics, specifically in their application to healthcare (rehabilitation), arts, and HRI. Upon completing this course, students will be able to:

During the first week, students will work remotely on the foundational aspects of the project, focusing on background research, theoretical issues, and prototype design. The goal is to prepare the groundwork for hands-on activities in Week 2.

The physical mobility part will run from 17 to 21 February 2025 in Genoa (IT). The seminar focuses on developing and applying wearable technology and telerobotics, particularly in healthcare rehabilitation, arts, and HRI. Students will collaborate in interdisciplinary groups of 4-5 to develop practical solutions that address real-world challenges in these fields.

Monday, 10/2/2025

Tuesday, 11/2/2025

Wednesday, 12/2/2025

Thursday, 13/2/2025

Friday, 14/2/2025

Monday, 17/2/2025

Tuesday, 18/2/2025

Wednesday, 19/2/2025

Thursday, 20/2/2025

Friday, 21/2/2025

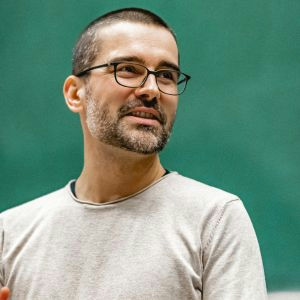

Fulvio Mastrogiovanni is an Associate Professor at the University of Genoa (UniGe), Italy, and a Researcher affiliated with the Italian Institute of Technology. He received a Laurea degree and a Ph.D. from UniGe in 2003 and 2008, respectively. Fulvio is a member of the Board of the Ph.D. School in Bioengineering and Robotics, as well as of the National Ph.D. School in Robotics. He is co-PI of the joint lab between UniGe and WeBuild SpA on robotics for construction. Fulvio has been a Visiting Professor at institutions in America, Europe, and Asia. He served as Head of Program for the international MSc in Robotics Engineering (part of the Erasmus+ EMARO and JEMARO programs) at UniGe, and as Deputy Rector for International Affairs. Fulvio has organized many international scientific events (in the RO-MAN, IROS, and ERF conference series) and journal special issues, and will serve as General Chair of IAS 2025. Fulvio teaches courses in cognitive architectures, human-robot interaction, and artificial intelligence for robotics. He has participated in many national and international funded projects and cooperates with research centers worldwide. He is the founder of Teseo and IOSR, two spinoff companies from UniGe, and an advisor to established companies and startups on artificial intelligence solutions. Fulvio has received awards for his scientific and technology transfer activities, including the Italian Young Innovator Award in 2021. He has published more than 190 contributions, including 5 international patents.

Monday, February 10th – h. 9:30 – 11:00

This lecture explores the central role of non-verbal communication in advancing human-robot interaction (HRI). By examining the mechanisms underlying embodied communication, we investigate how robots can interpret human intentions, emotions, and states by analyzing subtle cues in motion and behavior. The discussion emphasizes how wearable sensors and sensorized objects can help robots infer contextual information, anticipate actions, and adapt autonomously to dynamic human needs. Integrating these capabilities into robotic cognition fosters intuitive, transparent interactions, paving the way for human-centric, adaptable robotic systems. Through examples, the lecture also shows how robots can serve as valuable tools to deepen our understanding of human social dynamics, bridging the gap between cognition and technology.

Alessandra Sciutti is a Tenure Track Researcher and head of the CONTACT (COgNiTive Architecture for Collaborative Technologies) Unit of the Italian Institute of Technology (IIT). She received her B.S. and M.S. degrees in Bioengineering and her Ph.D. in Humanoid Technologies from the University of Genova in 2010. After two research periods in the USA and Japan, she was awarded the ERC Starting Grant wHiSPER in 2018, focused on the investigation of joint perception between humans and robots. She has published more than 100 papers and abstracts in international journals and conferences, coordinates the ERC POC Project ARIEL (Assessing Children Manipulation and Exploration Skills), and has participated in the coordination of the CODEFROR European IRSES project. She is currently Chief Editor of the HRI Section of Frontiers in Robotics and AI and Associate Editor for several journals, including the International Journal of Social Robotics, the IEEE Transactions on Cognitive and Developmental Systems, and Cognitive System Research. She is an ELLIS scholar and the corresponding co-chair of the IEEE RAS Technical Committee for Cognitive Robotics.

Monday, February 10th – h. 11:15 – 13:00

Tuesday, February 11th – h. 9:15 – 11:00

ROS is a robotic middleware that provides a collection of packages for common functionalities, including low-level control, hardware abstraction, and message passing. Thanks to its versatility, it has become a de facto standard in robotics. This lecture will explore its most relevant features through practical examples, demonstrating how the ROS framework can help solve common robotics problems. It will also offer a general overview of ROS along with operational guidelines.
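To make the idea of message passing concrete, here is a plain-Python toy of the topic-based publish/subscribe pattern that ROS is built around. It is not the rospy or rclpy API: the `ToyBus` class, its methods, and the topic names are hypothetical, and real ROS nodes exchange typed messages across processes rather than calling functions inside one program.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Callback = Callable[[Any], None]


class ToyBus:
    """In-process stand-in for ROS topics (no networking, no typed messages).

    Publishers and subscribers never reference each other; they only agree on a
    topic name, which is the decoupling that ROS message passing provides.
    """

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        # Register a callback to be invoked for every message on this topic.
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> int:
        # Deliver the message to every callback on this topic; return how many received it.
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


# Hypothetical usage: a "driver" publishes IMU readings, a "logger" subscribes to them.
bus = ToyBus()
received: List[Any] = []
bus.subscribe("/imu/data", received.append)
bus.publish("/imu/data", {"ax": 0.0, "ay": 0.0, "az": 9.81})
print(received)
```

Because neither side knows about the other, a sensor driver can be swapped for a simulator or a log replay without touching the consumers, which is much of what makes the ROS approach reusable.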

Carmine Recchiuto is an Associate Professor at the University of Genoa. He co-founded the RICE laboratory, which focuses on social robotics and autonomous systems for disaster response. His research includes cultural competence in robot-human interaction, cognitive systems, and assistive robotics.

Tuesday, February 11th – h. 11:15 – 13:00

The lecture will discuss what makes a robot or system autonomous and highlight the challenges that arise when such systems interact with humans. It will present how biomechanical and psychological aspects can be considered in engineering design to improve functionality and user experience. By analyzing design methodologies and human-in-the-loop approaches, the lecture emphasizes how to shape human-robot interaction so that it is intuitive and safe.

Philipp Beckerle received his Dr.-Ing. in mechatronics from TU Darmstadt, Germany, in 2014 and his habilitation from TU Dortmund, Germany, in 2021. He is a full professor and chair of Autonomous Systems and Mechatronics at FAU Erlangen-Nürnberg (www.asm.tf.fau.de) and has been a visiting researcher at Vrije Universiteit Brussel, Arizona State University, and the University of Siena. His research focuses on human-centered mechatronics and robotics, and his work has been honored with various awards.

Wednesday, February 12th – h. 9:15 – 11:00

This lecture will introduce and discuss how the investigation of human factors in terms of mental and neural processes can improve the design of human-technology systems, with special attention to scenarios involving robotic devices, physiological computing, and virtual and augmented settings for improving the user experience, well-being, and the overall system performance.

Giacinto Barresi is a Professor of Robotics (with a background in human factors) at the Bristol Robotics Laboratory, School of Engineering, CATE, UWE Bristol (United Kingdom), where he works on intelligent and interactive technologies to power human-robot systems for biomedical applications. He previously worked at the Istituto Italiano di Tecnologia in Genoa (Italy), and he has been a visiting researcher at the Open University in Milton Keynes (United Kingdom), the Indian Institute of Technology Gandhinagar (India), and Kyushu University in Fukuoka (Japan).

Wednesday, February 12th – h. 11:15 – 13:00

Alessandro Carfì is a robotics researcher and assistant professor at the University of Genoa, specializing in artificial intelligence for human-robot interaction. He holds a PhD in Robotics Engineering and has extensive experience in AI-driven systems, wearable sensors, and mixed reality interfaces. His research integrates machine learning and cognitive architectures to enhance robotic autonomy and human-robot collaboration. Alessandro has participated in multiple funded projects, supervised MSc and PhD students, and contributed to international research initiatives in robotics and AI.

Thursday, February 13th – h. 9:15 – 11:00

We will introduce the main concepts of Virtual, Augmented and eXtended Reality (VR/AR/XR), focusing on the differences and similarities among these techniques.

Specifically, we will analyze the main hardware and software features of the modern devices used to convey virtual and augmented reality environments to a user: immersive and non-immersive VR, head-mounted displays, video see-through and optical see-through AR, and, finally, the most recent XR devices.

We will also discuss the main limitations of such devices with respect to the features of our visual system, and the techniques that can be adopted to mitigate these issues.

Finally, we will present several practical examples, with the aim of discussing the various possibilities offered by VR/AR and MR, also with respect to the possible future opportunities of the Metaverse.
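One well-known example of a limitation rooted in our visual system is the vergence-accommodation conflict: in a headset whose optics have a fixed focal distance, the eyes converge on the depth at which a virtual object is rendered while having to focus on the display's focal plane. The sketch below quantifies that mismatch in diopters (inverse metres). The 63 mm interpupillary distance and 2 m focal distance are illustrative assumptions, not specifications of any particular device.

```python
import math


def vergence_angle_deg(ipd_m: float, distance_m: float) -> float:
    """Angle between the two lines of sight when fixating a point straight ahead."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def vac_diopters(virtual_distance_m: float, focal_distance_m: float) -> float:
    """Mismatch between the vergence demand and the accommodation demand.

    In a fixed-focus headset, accommodation stays at the optics' focal distance,
    while vergence follows the rendered depth of the virtual object.
    """
    return abs(1.0 / virtual_distance_m - 1.0 / focal_distance_m)


# Hypothetical setup: 63 mm IPD, object rendered at 0.5 m, optics focused at 2 m.
ipd = 0.063
print(f"vergence angle: {vergence_angle_deg(ipd, 0.5):.2f} deg")
print(f"conflict: {vac_diopters(0.5, 2.0):.2f} D")
```

The conflict vanishes only when content is rendered at the focal distance, which is one reason near-field interaction in VR/AR tends to be the most demanding case.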

Manuela Chessa is Associate Professor in Computer Science at University of Genoa, Italy, where she is Principal Investigator of the Perception&Interaction Lab@DIBRIS. She has a PhD in Bioengineering, and since 2005, her research activities have focused on the study of biological and artificial vision systems, the development of bioinspired models, natural human-machine interfaces based on virtual, augmented, and extended reality, and the perceptual and cognitive aspects of interaction in VR, AR, and XR. She is involved in several national and international research projects addressing the development and use of XR technologies in healthcare, rehabilitation, and training. She is teaching the CAIVARS and HealthXR Tutorials at IEEE ISMAR and IEEE VR.

Fabio Solari is an Associate Professor with the Department of Informatics, Bioengineering, Robotics, and Systems Engineering at the University of Genoa, Italy. His research interests include computational models of visual perception, the design of bio-inspired artificial vision algorithms, the study of perceptual effects of virtual and augmented reality systems, and the development of natural human-computer interactions in VR, AR, MR, and extended reality.

Thursday, February 13th – h. 11:15 – 13:00

Heart failure (HF) is a chronic condition affecting over 64 million people worldwide and is the leading cause of hospitalization in individuals over 65 years old. Early detection of fluid retention is critical for preventing decompensation and reducing hospital admissions. Wearable devices offer a promising solution by enabling continuous, real-time monitoring outside clinical settings. This lecture presents a comprehensive workflow for data acquisition, transmission, and visualization in wearable systems, using the development of a bioimpedance-based HF monitoring device as a case study. The system continuously measures bioimpedance in the calf—a key indicator of fluid retention—using a low-power embedded platform with the AD5941 chip. The raw impedance data undergoes preprocessing and calibration directly on the device before being transmitted via a Nordic microcontroller to an Android application. The mobile app serves as an intermediary, formatting and securely transmitting the data to a private server running InfluxDB for time-series storage. Finally, Grafana generates real-time dashboards, allowing physicians to monitor trends remotely and make timely clinical decisions.
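The calibration and data-formatting steps of this workflow can be sketched as follows. This is a minimal illustration under stated assumptions, not the firmware or app described in the lecture: it assumes the same excitation voltage drives both a known calibration resistor and the calf, so that Z_x = R_cal * I_cal / I_x, with both currents given as complex (real and imaginary) DFT values. The measurement, tag, and field names are hypothetical. The output follows InfluxDB's line protocol, `measurement,tags fields timestamp`, with the timestamp in nanoseconds.

```python
import cmath
import math


def calibrate_impedance(i_cal: complex, i_x: complex, r_cal_ohm: float) -> complex:
    """Ratiometric calibration against a known resistor (assumed measurement scheme).

    With the same excitation voltage V: I_cal = V / R_cal and I_x = V / Z_x,
    hence Z_x = R_cal * I_cal / I_x. Gain and phase errors shared by both
    measurements cancel in the ratio.
    """
    return r_cal_ohm * i_cal / i_x


def to_line_protocol(z: complex, patient_id: str, timestamp_ns: int) -> str:
    """Format one sample as an InfluxDB line-protocol record (hypothetical schema)."""
    magnitude = abs(z)
    phase_deg = math.degrees(cmath.phase(z))  # negative phase = capacitive behavior
    return (
        f"bioimpedance,patient={patient_id},site=calf "
        f"magnitude_ohm={magnitude:.3f},phase_deg={phase_deg:.3f} {timestamp_ns}"
    )


# Hypothetical sample: DFT values in arbitrary ADC units and a 1 kOhm calibration resistor.
z_calf = calibrate_impedance(i_cal=100 + 0j, i_x=1000 + 1000j, r_cal_ohm=1000.0)
print(to_line_protocol(z_calf, patient_id="p01", timestamp_ns=1_700_000_000_000_000_000))
```

Storing magnitude and phase as separate fields of one measurement keeps each sample a single time-series point, which is convenient for plotting trends in a dashboard.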

Santiago F. Scagliusi is an electronic engineer and researcher at the University of Seville. His work focuses on wearable health technology, specifically bioimpedance monitoring for heart failure patients.

Monday, February 17th – h. 9:30 – 11:00

Electromyography (EMG) is a powerful tool for assessing muscle activity, but extracting meaningful information from raw signals requires advanced data analysis techniques. This lecture explores various approaches for processing EMG signals, highlighting methods that enhance signal interpretation, improve feature extraction, and enable applications in biomechanics, rehabilitation, and human-computer interaction.
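A common starting point for feature extraction is a set of time-domain features computed over short windows of the signal. The sketch below implements four classic ones (mean absolute value, RMS, waveform length, zero crossings) plus a sliding RMS envelope in plain Python. It assumes the raw signal has already been band-pass filtered, a step omitted here, and it is not necessarily the pipeline presented in the lecture.

```python
import math
from typing import Dict, List, Sequence


def remove_offset(signal: Sequence[float]) -> List[float]:
    """Subtract the mean so the signal oscillates around zero (DC offset removal)."""
    mean = sum(signal) / len(signal)
    return [s - mean for s in signal]


def emg_features(window: Sequence[float], zc_threshold: float = 0.0) -> Dict[str, float]:
    """Classic time-domain EMG features computed on one analysis window."""
    x = remove_offset(window)
    n = len(x)
    mav = sum(abs(v) for v in x) / n               # mean absolute value (rectified amplitude)
    rms = math.sqrt(sum(v * v for v in x) / n)     # root mean square (signal power)
    wl = sum(abs(x[i] - x[i - 1]) for i in range(1, n))  # waveform length (complexity)
    zc = sum(                                      # zero crossings (rough frequency content)
        1 for i in range(1, n)
        if x[i - 1] * x[i] < 0 and abs(x[i] - x[i - 1]) >= zc_threshold
    )
    return {"MAV": mav, "RMS": rms, "WL": wl, "ZC": float(zc)}


def rms_envelope(signal: Sequence[float], win: int) -> List[float]:
    """Sliding-window RMS envelope (one value per full window) tracking activation level."""
    x = remove_offset(signal)
    return [math.sqrt(sum(v * v for v in x[i:i + win]) / win) for i in range(len(x) - win + 1)]


print(emg_features([0.2, -0.5, 0.4, -0.1, 0.3, -0.6]))
```

The `zc_threshold` parameter lets small noise-induced sign changes be ignored; its value would have to be tuned to the recording setup.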

Maura Casadio is a Full Professor of Biomedical Engineering at the University of Genoa, Italy. Her research focuses on neural control of movement and forces, rehabilitation, biomedical robotics, sensory processing, artificial and augmented sensing (proprioception and somatosensation), and body–machine interfaces.

Camilla Pierella is an Assistant Professor at the University of Genova in the Department of Informatics, Bioengineering, Robotics and Systems Engineering, where her research addresses the neural control of movement and motor adaptation in healthy, athletic, and neurological conditions.

Restoring the sense of touch through artificial systems remains a fundamental challenge in prosthetics. This lecture focuses on human-in-the-loop haptic systems that integrate materials, signal processing, and sensory feedback mechanisms. We discuss the challenges of translating tactile sensor data into meaningful information for humans, using upper limb prosthetics as a case study.
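As a simple illustration of translating tactile sensor data into something a person can feel, the sketch below maps a pressure reading to the duty cycle of a vibration motor, a basic form of sensory substitution. The threshold, saturation, and minimum duty-cycle values are hypothetical placeholders, and actual prosthetic feedback systems may use considerably richer encodings than this linear mapping.

```python
from typing import Sequence


def pressure_to_duty_cycle(pressure_kpa: float, threshold_kpa: float = 2.0,
                           saturation_kpa: float = 50.0, min_duty: float = 0.2) -> float:
    """Map one pressure reading to a vibration-motor duty cycle in [0, 1].

    Below the threshold the motor stays off, so sensor noise is not felt; above it the
    duty cycle rises linearly from min_duty (a floor meant to keep weak cues noticeable)
    to 1.0 at saturation, and is clamped beyond that.
    """
    if pressure_kpa < threshold_kpa:
        return 0.0
    if pressure_kpa >= saturation_kpa:
        return 1.0
    fraction = (pressure_kpa - threshold_kpa) / (saturation_kpa - threshold_kpa)
    return min_duty + (1.0 - min_duty) * fraction


def taxels_to_duty_cycle(readings_kpa: Sequence[float]) -> float:
    """Collapse a tactile array into a single cue by following its most loaded taxel."""
    return pressure_to_duty_cycle(max(readings_kpa))


# Hypothetical fingertip array: one taxel pressed at 26 kPa, the others near rest.
print(taxels_to_duty_cycle([0.5, 26.0, 3.0]))
```

Collapsing the whole array to one value discards where contact happens; conveying location would need several actuators or a spatial code, which is part of what makes the translation problem hard.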

Lucia Seminara holds a PhD in Physics from EPFL (CH) and is an Associate Professor of Electronic Engineering at UNIGE, where she develops haptic systems to restore touch. Passionate about fostering intuitive communication between haptic devices and humans, she leads TACTA, which unites researchers and artists in transdisciplinary research on touch.

Thursday, February 13th – h. 9:30 – 11:00

“Genetic Glitch” is a wearable biotechnological art project that visualizes the unique genetic code of the wearer as a living, dynamic display. This bio-art piece integrates synthetic biology, responsive materials, and generative visuals to create a wearable that expresses real-time mutations, ancestral lineage, or personalized biofeedback through aesthetic transformations. The lecture presents key historical references to bio-art projects that have influenced wearable biotechnological concepts, particularly in DNA art, synthetic biology, and living materials. (Richard Kitta / CORE Labs / Faculty of Arts TUKE)

Richard Kitta (1979, SK) is a multimedia artist, theorist, and university professor. He leads “glocal” art projects such as DIG (Digital Intervention Group), MAO (Media Art Office), Creative Playgrounds, ENTER, and CORE Labs. Through the DIG platform's involvement in the prestigious EDASN project with Ars Electronica, Linz (Austria), he contributed to Košice being named a UNESCO Creative City of Media Arts in 2017.

4E Studio is an interdisciplinary practice exploring the relationship between the human body and technology, led by Federica Sasso and Luca Pagan. Their work investigates the creation of new senses and perceptions, redefining human experience design through an innovative use of new technologies.

Workshop #2 – Tuesday, February 18th – h. 11:15 – 13:00

SCENARIO: The evolution of gesture recognition over the years has had a growing impact on the field of robotics, significantly improving human-robot interaction across various applications. This technology is particularly valuable in industrial collaboration and the medical field, such as rehabilitation. Through gesture recognition, robots can understand human needs and intentions, allowing them to respond effectively to different requirements.

Gesture recognition relies on data acquisition through sensors. Various types of sensors can be used for this purpose, including wearable sensors like Inertial Measurement Units (IMUs) and non-wearable sensors like cameras. Wearable sensors offer advantages such as tracking motion trajectories, velocity, and acceleration without suffering from issues like occlusion, which often affect non-wearable sensors.

The data collected from these sensors, when processed with machine learning or deep learning models trained on gesture datasets, enable real-time gesture recognition for robot interaction.

OBJECTIVE: In this challenge, students will use an existing dataset collected through wearable sensors to implement a gesture recognition model. They will then evaluate the model’s performance by testing its accuracy using validation methods.
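The full train-and-validate loop can be sketched compactly under simplifying assumptions: each IMU window is summarized by the mean and standard deviation of every channel, a nearest-centroid classifier stands in for the machine learning or deep learning models mentioned above, and accuracy is estimated with k-fold cross-validation. The synthetic "still" and "wave" windows are generated for illustration only and are not the workshop dataset.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[List[float], str]  # (feature vector, gesture label)


def window_features(window: Sequence[Sequence[float]]) -> List[float]:
    """Mean and standard deviation of each IMU channel over one window (rows = time steps)."""
    n = len(window)
    features: List[float] = []
    for channel in zip(*window):
        mean = sum(channel) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in channel) / n)
        features += [mean, std]
    return features


def fit_centroids(train: Sequence[Sample]) -> Dict[str, List[float]]:
    """Average the feature vectors of each gesture class."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, label in train:
        acc = sums.setdefault(label, [0.0] * len(x))
        sums[label] = [a + v for a, v in zip(acc, x)]
        counts[label] = counts.get(label, 0) + 1
    return {label: [a / counts[label] for a in acc] for label, acc in sums.items()}


def predict(centroids: Dict[str, List[float]], x: Sequence[float]) -> str:
    """Assign the label of the closest class centroid (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], x))


def k_fold_accuracy(data: Sequence[Sample], k: int = 5, seed: int = 0) -> float:
    """Train on k-1 folds, test on the held-out fold, repeat k times, pool the hits."""
    indices = list(range(len(data)))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    correct = 0
    for fold in folds:
        held_out = set(fold)
        centroids = fit_centroids([data[i] for i in indices if i not in held_out])
        correct += sum(predict(centroids, data[i][0]) == data[i][1] for i in fold)
    return correct / len(data)


# Synthetic 3-axis accelerometer windows (50 samples each) for two hypothetical gestures.
rng = random.Random(42)
data: List[Sample] = []
for _ in range(20):
    still = [[rng.gauss(0, 0.05), rng.gauss(0, 0.05), 9.81 + rng.gauss(0, 0.05)] for _ in range(50)]
    wave = [[3 * math.sin(t / 3) + rng.gauss(0, 0.05), rng.gauss(0, 0.05), 9.81] for t in range(50)]
    data.append((window_features(still), "still"))
    data.append((window_features(wave), "wave"))

print(f"5-fold accuracy: {k_fold_accuracy(data, k=5):.2f}")
```

Swapping `fit_centroids` and `predict` for a stronger model leaves the cross-validation loop unchanged. With real recordings, folds should ideally be split by participant so the score reflects generalization to new users.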

Workshop #3 – Wednesday, February 19th – h. 11:15 – 13:00

Challenge #2: Sound feedback in human-quadruped robot interaction

In collaboration with 4E Studio.

SCENARIO

Over the past 20 years, human-robot interaction has evolved significantly, transitioning from basic terminal commands using a keyboard and mouse to advanced wearable interfaces, gesture recognition systems, and AI-driven interactions.

Typically, feedback in human-robot interaction is conveyed through visual interfaces (such as GUI or VR/AR) or physical interfaces (e.g., haptic feedback and biosensors).

However, the auditory domain remains largely unexplored; one of its most common applications is sonar-based parking assistance in cars. Given that auditory information is processed significantly faster than visual input, sound-based feedback could offer a highly effective means of communication between humans and robots. This raises key questions:

OBJECTIVE

The goal is to guide a quadruped robot in real time using a wearable device equipped with an IMU sensor, while receiving complex audio feedback corresponding to the robot’s spatial position.
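One possible way to wire up this loop is sketched below; every mapping, gain, and axis convention in it is a hypothetical design choice, not the workshop's specification. The IMU's tilt, estimated from the gravity vector while the hand is roughly still, becomes forward-speed and turn-rate commands with a dead zone. The robot's position relative to the user is sonified: a closer robot gives a higher pitch, in the spirit of a parking sensor, while its bearing sets a constant-power stereo pan.

```python
import math
from typing import Tuple


def tilt_from_accel(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Pitch and roll (rad) of a quasi-static IMU, estimated from the measured gravity vector."""
    pitch = math.atan2(-ax, math.hypot(ay, az))
    roll = math.atan2(ay, az)
    return pitch, roll


def tilt_to_command(pitch: float, roll: float, max_speed: float = 0.5, max_turn: float = 1.0,
                    dead_zone: float = math.radians(5), full_tilt: float = math.radians(30)
                    ) -> Tuple[float, float]:
    """Map hand tilt to (forward speed m/s, turn rate rad/s); pitch drives speed, roll turning."""

    def scale(angle: float, limit: float) -> float:
        if abs(angle) < dead_zone:  # ignore small tilts so hand tremor does not move the robot
            return 0.0
        fraction = min((abs(angle) - dead_zone) / (full_tilt - dead_zone), 1.0)
        return math.copysign(fraction * limit, angle)

    return scale(pitch, max_speed), scale(roll, max_turn)


def position_to_audio(dx: float, dy: float, base_hz: float = 220.0, max_hz: float = 880.0,
                      max_range_m: float = 5.0) -> Tuple[float, float, float]:
    """Sonify the robot position relative to the user (x forward, y left).

    Returns (frequency_hz, left_gain, right_gain); left**2 + right**2 == 1 (constant power).
    """
    distance = math.hypot(dx, dy)
    closeness = 1.0 - min(distance / max_range_m, 1.0)
    frequency = base_hz + (max_hz - base_hz) * closeness
    bearing = math.atan2(dy, dx)                         # positive = robot on the user's left
    pan = max(-1.0, min(1.0, -bearing / (math.pi / 2)))  # -1 = full left, +1 = full right
    angle = (pan + 1.0) * math.pi / 4.0
    return frequency, math.cos(angle), math.sin(angle)


# Hypothetical reading; with this axis convention the hand is tilted forward by about 15 deg.
pitch, roll = tilt_from_accel(ax=-2.5, ay=0.0, az=9.5)
print(tilt_to_command(pitch, roll))
print(position_to_audio(dx=1.0, dy=1.0))
```

The constant-power pan keeps perceived loudness roughly stable as the robot moves across the stereo field, so loudness changes are not confused with distance, which is already carried by pitch.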

Valerio Belcamino is a Ph.D. candidate in Robotics at the University of Genoa. He holds a Bachelor’s and Master’s in Computer Engineering from Politecnico di Torino. His research focuses on human motion analysis and activity recognition. He has worked at JAIST (Japan) and has experience in teaching AI and robotics.

Workshop #1 – Monday, February 17th – h. 11:15 – 13:00

Dr. Omar Eldardeer is a Postdoctoral Researcher at the COgNiTive Architecture for Collaborative Technologies (CONTACT) unit of the Italian Institute of Technology. His research focuses on building cognitive architectures for perception, learning, and interaction in robots with the human in the loop, typically in interaction or collaboration scenarios.

He earned his B.Eng. in Electrical Energy Engineering from Cairo University, Egypt, followed by an M.Sc. in Artificial Intelligence and Robotics from the University of Essex, UK. He then obtained his Ph.D. in Robotics from the University of Genova and the Italian Institute of Technology, where he explored audio-visual cognitive architectures for human-robot shared perception.

During his Ph.D. and postdoctoral research, Dr. Eldardeer contributed to the VOJEXT and APRIL EU projects and spent research periods at the University of Essex, the University of Lethbridge, and the University of Bremen.

This talk will present recent advances at the Artificial and Mechanical Intelligence Laboratory (AMI) in leveraging wearable devices for two key objectives: (i) robotic avatars and (ii) physical human-robot interaction via whole-body controllers. The presentation will take a high-level approach, emphasizing applications, while also covering the underlying methodologies and algorithms so that the discussion is self-contained. Given time constraints, the talk cannot be exhaustive; however, the audience will be given essential references for further reading on the topics discussed.

Wearables in Robotic Avatar Systems

The talk will cover research on the teleoperation of robotic avatars conducted by the speaker and the research group over a six-year period. It will provide a historical perspective on the evolution of teleoperation infrastructure, from its early, simplified form in [Intellisys] to its development and maturation at AMI, culminating in participation in the ANA XPrize and a subsequent publication in Science Robotics [Science]. Key aspects of inverse kinematics and geometric retargeting will be briefly discussed. Finally, case studies will be presented where robots with different morphologies are controlled remotely, such as teleoperating the Honda avatar robot in Saitama from our lab in Genoa.
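Inverse kinematics and geometric retargeting can be illustrated on a deliberately simplified case, a planar two-link arm. Here retargeting is reduced to scaling the operator's shoulder-relative hand position by the ratio of arm lengths, and the robot joint angles then follow from the analytic two-link IK solution. This is a sketch under those assumptions, not the AMI pipeline, which deals with full 3D, multi-joint bodies; link lengths and positions are hypothetical.

```python
import math
from typing import Tuple


def retarget(hand_xy: Tuple[float, float], human_arm_len: float,
             robot_arm_len: float) -> Tuple[float, float]:
    """Scale the operator's shoulder-relative hand position into the robot's workspace."""
    s = robot_arm_len / human_arm_len
    return hand_xy[0] * s, hand_xy[1] * s


def two_link_ik(x: float, y: float, l1: float, l2: float,
                flip_elbow: bool = False) -> Tuple[float, float]:
    """Analytic IK of a planar 2-link arm; unreachable targets are projected onto the workspace."""
    r = math.hypot(x, y)
    r = max(abs(l1 - l2), min(r, l1 + l2))           # clamp distance to the reachable annulus
    cos_q2 = (r * r - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    q2 = math.acos(max(-1.0, min(1.0, cos_q2)))       # law of cosines for the elbow angle
    if flip_elbow:
        q2 = -q2                                      # the mirrored elbow configuration
    phi = math.atan2(y, x)
    q1 = phi - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2


def forward_kinematics(q1: float, q2: float, l1: float, l2: float) -> Tuple[float, float]:
    """End-effector position, used here to check the IK solution."""
    return (l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
            l1 * math.sin(q1) + l2 * math.sin(q1 + q2))


# Hypothetical numbers: 0.6 m operator arm, robot links of 0.40 m and 0.35 m.
target = retarget((0.4, 0.2), human_arm_len=0.6, robot_arm_len=0.75)
q1, q2 = two_link_ik(*target, l1=0.4, l2=0.35)
print(target, forward_kinematics(q1, q2, 0.4, 0.35))
```

Clamping unreachable targets keeps the robot responsive when the operator reaches further than the robot can, a situation that becomes common once the two bodies have different morphologies.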

Wearables in Physical Human-Robot Interaction (pHRI)

Robots, particularly humanoids, are increasingly envisioned as collaborators in human-centric environments such as warehouses and hospitals. This necessitates the development of whole-body controllers capable of accounting for human partners in shared tasks. In this part of the lecture, we focus on research conducted at the Artificial and Mechanical Intelligence Laboratory to address a fundamental question: How can we design controllers and models that enable a robot to collaborate effectively with a human partner in performing a given task? Given the broad scope of this question, we narrow our discussion to the benchmark collaborative lifting task. We will explore the underlying control algorithms, dynamical models, and the crucial role of wearable sensors and technologies in facilitating human-robot cooperation. This part of the talk will be based on the results presented in [ICRA].
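A classic baseline for a robot that yields to the force a human applies to a shared object is admittance control, sketched below along a single axis: the measured human force drives a virtual mass-damper, M dv/dt + D v = F_h, whose velocity becomes the robot's motion command. This generic textbook technique is shown only for intuition; it is not the whole-body controller from [ICRA], and the mass, damping, and force values are hypothetical.

```python
from typing import List


def admittance_step(v: float, f_human: float, mass: float, damping: float, dt: float) -> float:
    """One explicit-Euler step of the virtual dynamics M * dv/dt + D * v = F_h."""
    dv_dt = (f_human - damping * v) / mass
    return v + dv_dt * dt


def simulate(force: float, seconds: float, mass: float = 5.0, damping: float = 20.0,
             dt: float = 0.001) -> List[float]:
    """Velocity command history for a constant human push, starting from rest."""
    v, history = 0.0, []
    for _ in range(int(round(seconds / dt))):
        v = admittance_step(v, force, mass, damping, dt)
        history.append(v)
    return history


# Hypothetical push of 10 N along the lifting direction for 3 s.
velocities = simulate(force=10.0, seconds=3.0)
print(f"final velocity: {velocities[-1]:.4f} m/s")
```

Under a constant push the velocity settles at F_h / D with time constant M / D, so lowering the virtual damping makes the shared object feel lighter to the human, at the cost of less resistance to unintended forces.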

Mohamed Elobaid

Since 2022, Mohamed Elobaid has been a Postdoctoral Researcher with the Artificial and Mechanical Intelligence research line at IIT, focusing on robust locomotion, physical human-robot interaction, and teleoperation. He earned a Ph.D. in Systems and Control in 2022 with maximum distinction through a joint program (cotutelle) between Sapienza University of Rome and the University of Paris-Saclay. Before that, he obtained an M.Sc. in Control Engineering with honors from Sapienza University of Rome in 2017. From 2012 to 2015, he worked as an Automation Engineer in Sudan. He holds a B.Sc. in Electrical and Electronics Engineering from the University of Khartoum (2011).
